What if a botched Google search card says you are a serial killer? | Trendsmedia

Many of us have come to rely heavily on Google Search and rarely question the accuracy of the information Google cherry-picks from the vast data on the web for its search cards. This incident, one part funny and two parts scary, makes it clear that Google's Knowledge Graph may not be as sacrosanct as you believed.

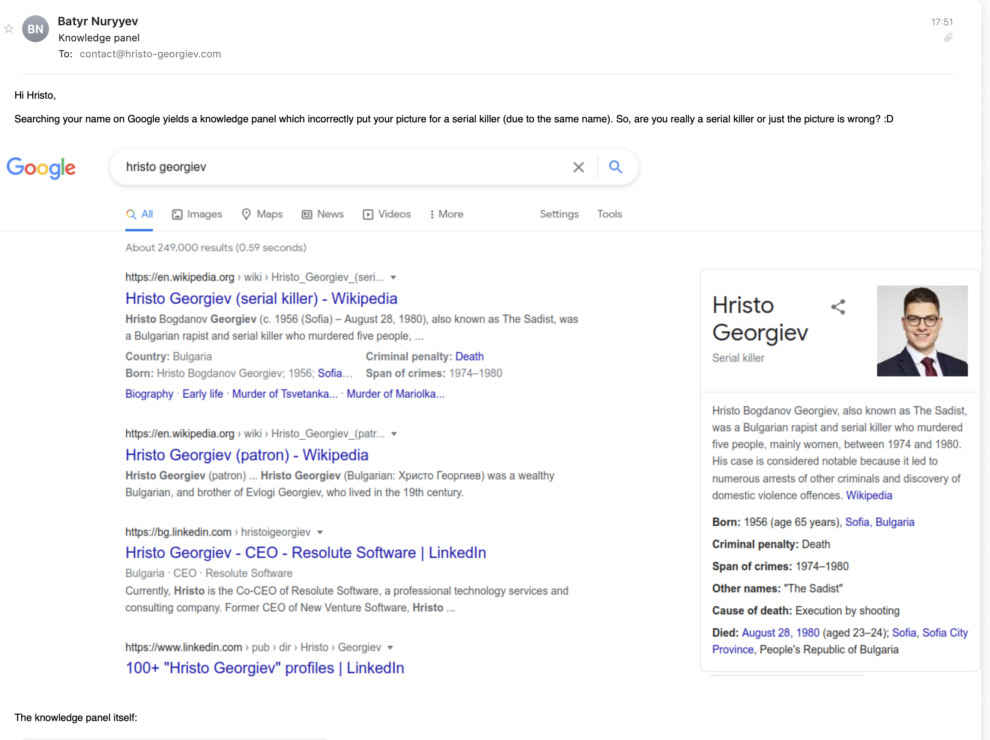

Hristo Georgiev learned from a former colleague that a Google search for his name returned a Knowledge Graph card that showed his photo alongside information about a Bulgarian rapist and serial killer of the same name. Known as ‘The Sadist’, that man murdered five people in the 1970s and was later executed by shooting.

The card cited a Wikipedia article as its source, yet that article contained nothing about Georgiev: neither his profile nor his image. It was Google's algorithms that wrongly matched the two. Even more troubling, Hristo Georgiev is not a unique name; hundreds of other people share it.

As Georgiev notes on his blog, such search bungles can have serious implications: “The fact that an algorithm that's used by billions of people can so easily bend information in such ways is truly terrifying.

“The rampant spread of fake news and cancel culture has made literally everyone who's not anonymous vulnerable. Whoever has a presence on the internet today has to look after their ‘online representation’. A small mistake in the system can lead to anything from a minor inconvenience to a disaster that can decimate careers and reputations of people in a matter of days.”

The issue gained attention on Hacker News, and Google has since fixed it. As community members pointed out, this was not a one-off: Google's cards have reportedly made errors as serious as listing the wrong symptoms for medical conditions or describing easily treatable illnesses as “incurable”.

That is even more worrying because Google Assistant reads from these cards, presenting them as authoritative answers to voice searches.

The takeaway from this incident: to err is not just human, so don't take anything an algorithm puts together at face value.


Credit to Digit.in
